A New Era for Forecasting & Planning?

Two years ago, in an article for Deloitte, I explored the potential of Large Language Models (LLMs) in planning & forecasting. It was the early days of Generative AI, and my approach was to be cautious amid considerable hype.

What did I get wrong, and what did I get right?

Looking back, I was too conservative. Today, I would say we are entering a new era for forecasting and planning.

My Original Prediction

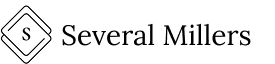

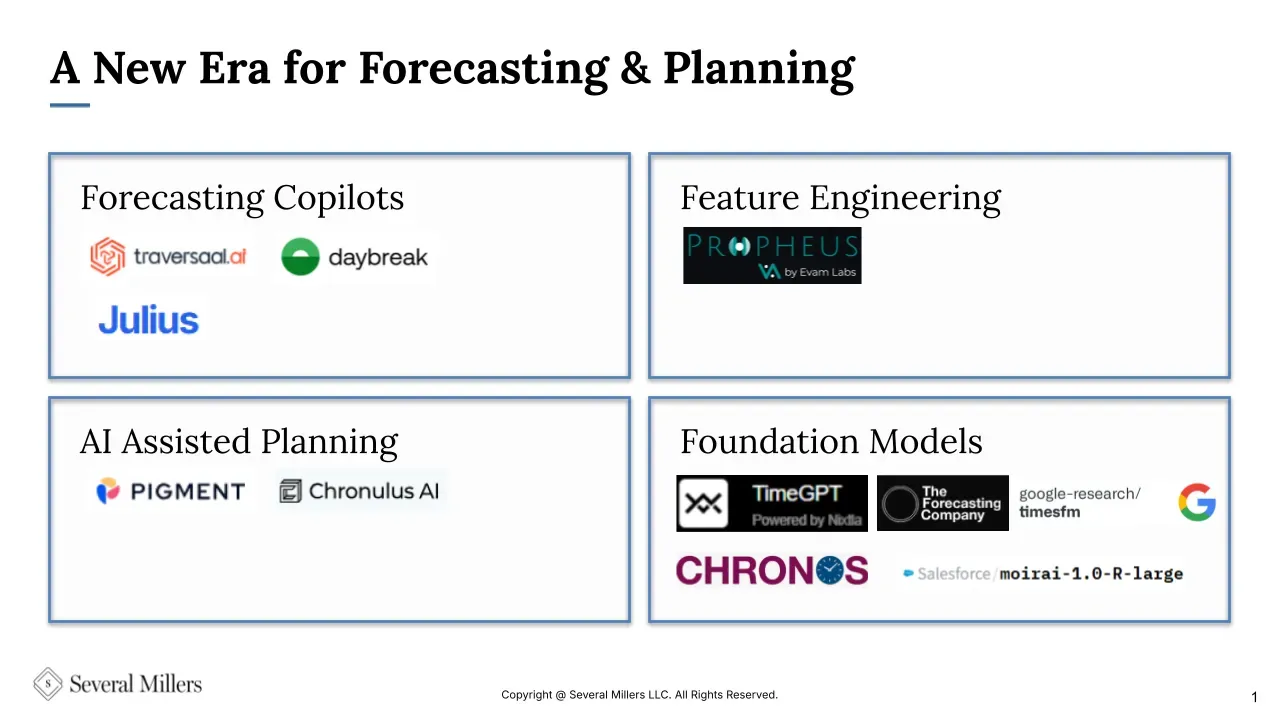

My initial article predicted three ways LLMs would support better forecasting and planning:

- Forecasting Code Gen (Forecasting Copilots: LLMs assisting in writing code for forecasting).

- Structuring Unstructured Data (Feature Engineering: using LLMs and embedding models to transform unstructured data into new features for forecasting models).

- Assisting the Planning Team (AI-Assisted Planning: agents supporting planning teams by gathering, organizing, and summarizing both structured and unstructured data, and providing reports and insights).

Notably absent was the idea of LLMs directly producing forecasts. At the time, I aimed to dispel a common misconception that went something like this:

“Previously we’ve used “AI” for forecasting, now this new, better “AI” will make even better forecasts.” - a common misunderstanding in the early days of Generative AI

The reality has proven more nuanced. LLMs are now being used directly for forecasting through innovative approaches like those from Chronulus AI. Furthermore, LLMs have spurred the development of foundation models for forecasting—pretrained models built with a similar approach and architectures.

What I Got Right

Forecasting Copilots:

Two years ago, general models like ChatGPT and GitHub Copilot weren't great at writing forecasting code. I predicted the emergence of forecasting-specific copilots.

Since then, some of the issues I faced with general models have been mitigated by tools that make it easier to inject online documentation into your context.

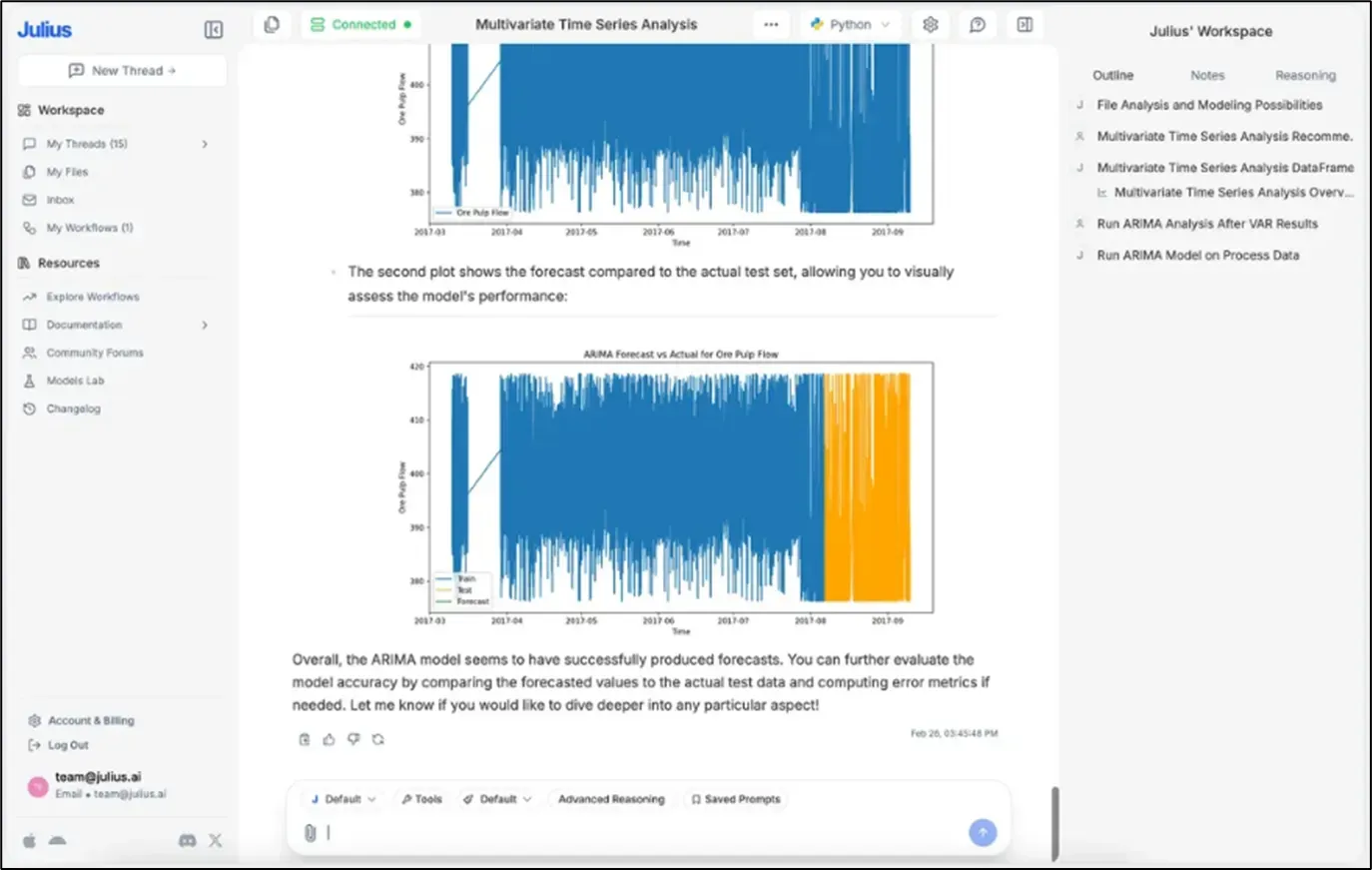

Perhaps more interesting, though, is that specialized tools for data science and forecasting have emerged. Traversaal and Julius are two interesting examples:

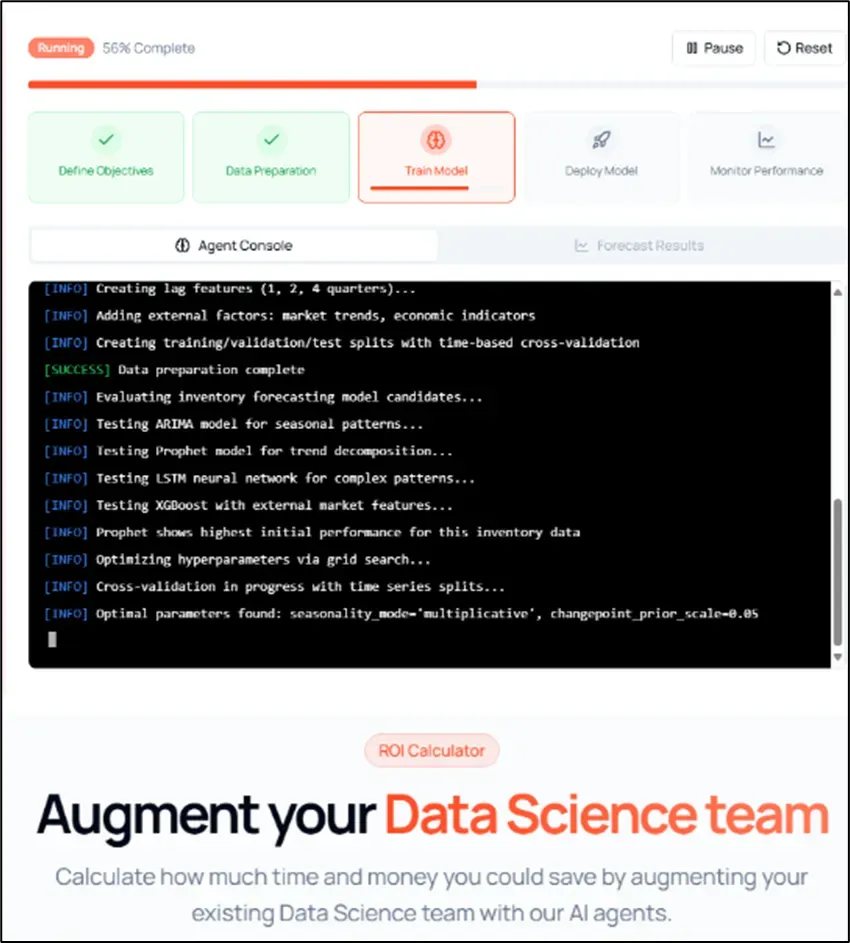

Screenshot from the Traversaal website

Screenshot from the Julius website

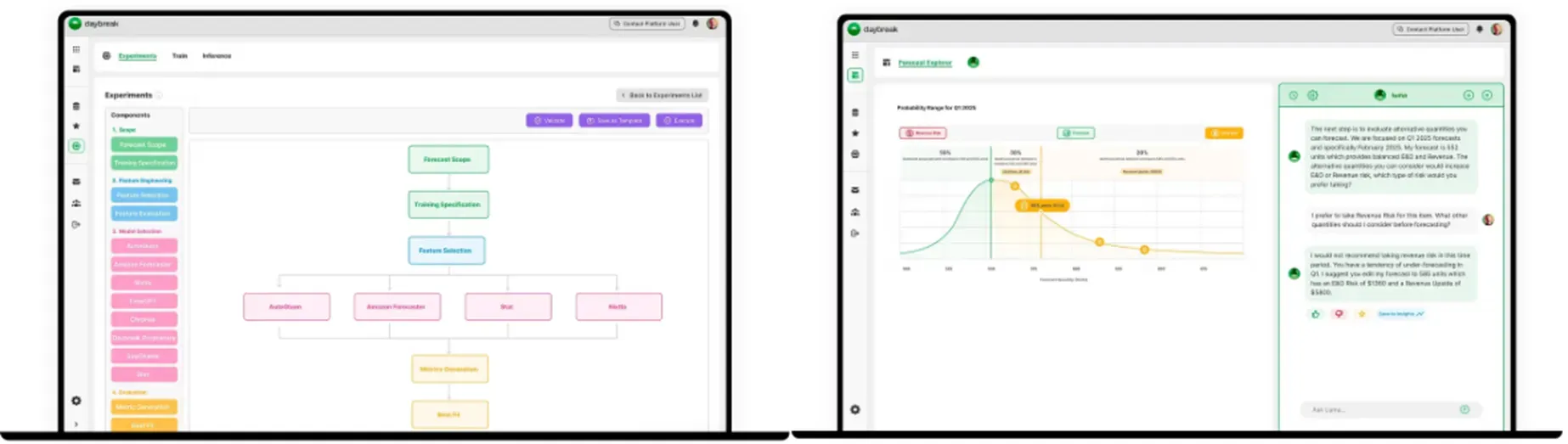

Additionally, Daybreak presents a compelling vision of "AI-First Supply Chain Planning" through a no-code model-building platform and Agentic Chat.

Screenshots from the Daybreak website

Feature Engineering:

Two years ago, I saw the advancement of embedding models and predicted increased use of these models to turn unstructured data into features for forecasting.

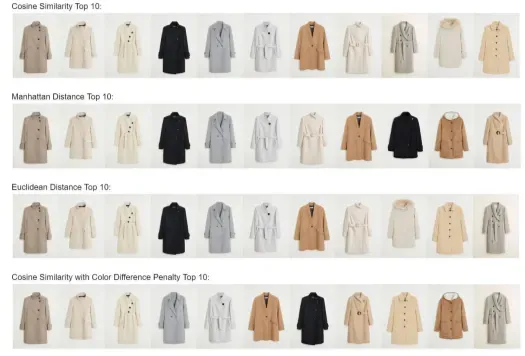

This has since been applied at scale by large retail organizations. A common initial use case is employing embeddings to identify similar products. This helps capture effects like cannibalization or generate forecasts for new products based on similar past sales.
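The similar-product idea can be sketched in a few lines. This is a minimal illustration, not any retailer's actual method: the product names, the three-dimensional embedding vectors, and the sales figures are all made up, and the helpers `most_similar` and `analog_forecast` are hypothetical. In practice the vectors would come from an embedding model applied to product descriptions or images.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def most_similar(new_vec, catalog, k=2):
    """Return the names of the k catalog products closest to new_vec."""
    ranked = sorted(
        catalog,
        key=lambda name: cosine_similarity(new_vec, catalog[name]),
        reverse=True,
    )
    return ranked[:k]

def analog_forecast(neighbors, launch_sales):
    """Average the launch-period sales of the neighbor products."""
    horizon = min(len(launch_sales[n]) for n in neighbors)
    return [
        sum(launch_sales[n][t] for n in neighbors) / len(neighbors)
        for t in range(horizon)
    ]

# Toy embeddings (assumed values; real ones have hundreds of dimensions).
catalog = {
    "oat_milk_1l": [0.9, 0.1, 0.0],
    "almond_milk_1l": [0.8, 0.2, 0.1],
    "cola_330ml": [0.1, 0.9, 0.2],
}
# Made-up unit sales for each product's first three weeks after launch.
launch_sales = {
    "oat_milk_1l": [100, 140, 120],
    "almond_milk_1l": [80, 100, 100],
    "cola_330ml": [500, 450, 400],
}

new_product = [0.85, 0.15, 0.05]  # hypothetical embedding of a new soy milk
neighbors = most_similar(new_product, catalog, k=2)
forecast = analog_forecast(neighbors, launch_sales)
print(neighbors, forecast)
```

A simple average of the neighbors is only one option; weighting each neighbor by its similarity score, or feeding the neighbors' sales into a forecasting model as features, are natural variations.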

An example from a case study of a retailer using embeddings to identify similar products to support a forecasting use case

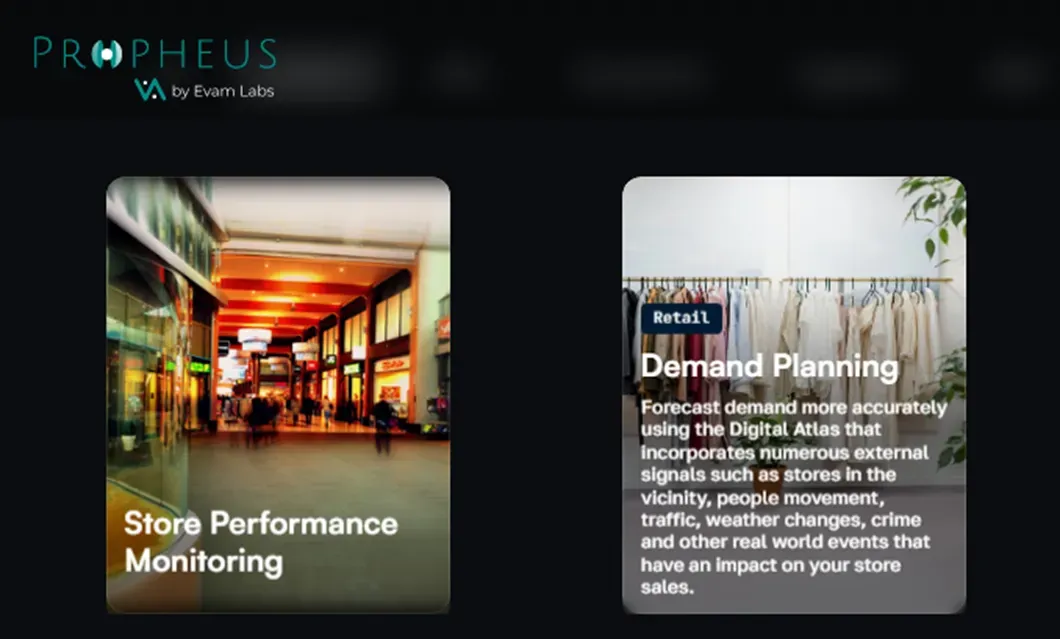

Beyond this intro use case, Propheus AI is offering to create complex embedding features that “capture multimodal signals about every place on Earth. These signals include demographics, human mobility data, news, information captured within LLMs, satellite imagery, weather and places.” The features map to your stores to enhance store-level forecasting.

A screenshot from the Propheus AI Website

What I Got Wrong

AI Planning Assistants:

Two years ago, I envisioned an S&OP process largely managed by AI agents:

“Imagine a chatbot that contacts the sales lead for a major account seven days before the demand review meeting, every month, like clockwork. It follows up on action items, provides a summary of key takeaways from last month’s meeting, and highlights where recent sales numbers diverged most from the forecasts. It then introduces new forecasts and solicits input, emphasizing areas to focus on based on previous discussions and results. These interactions occur simultaneously with all stakeholders for the demand review. Their inputs are structured, organized, and filed. When multiple stakeholders raise a similar point, the LLM assistant highlights areas of consensus in its automatically generated pre-read that is sent to all participants before the meeting.” - The Helping Hand in Sales and Operations Planning | Deloitte US

Unfortunately, this vision hasn't fully materialized (as far as I am aware). I recently came across Pigment, which promotes "AI-First Integrated Business Planning". However, it's unclear whether their offering extends to the full scope I envisioned. (If you have experience with Pigment, I'd love to hear about it!)

A screenshot from the Pigment website

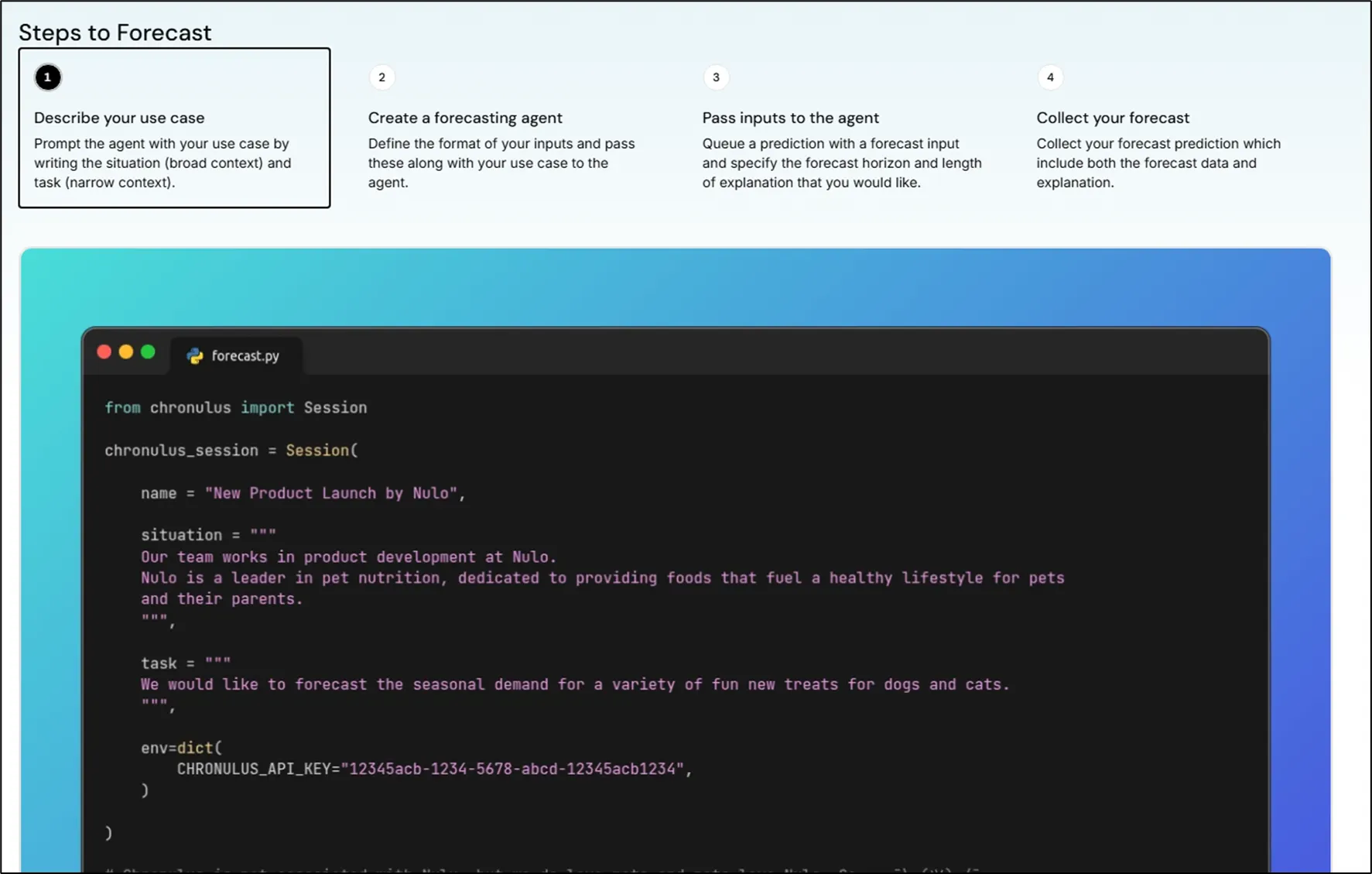

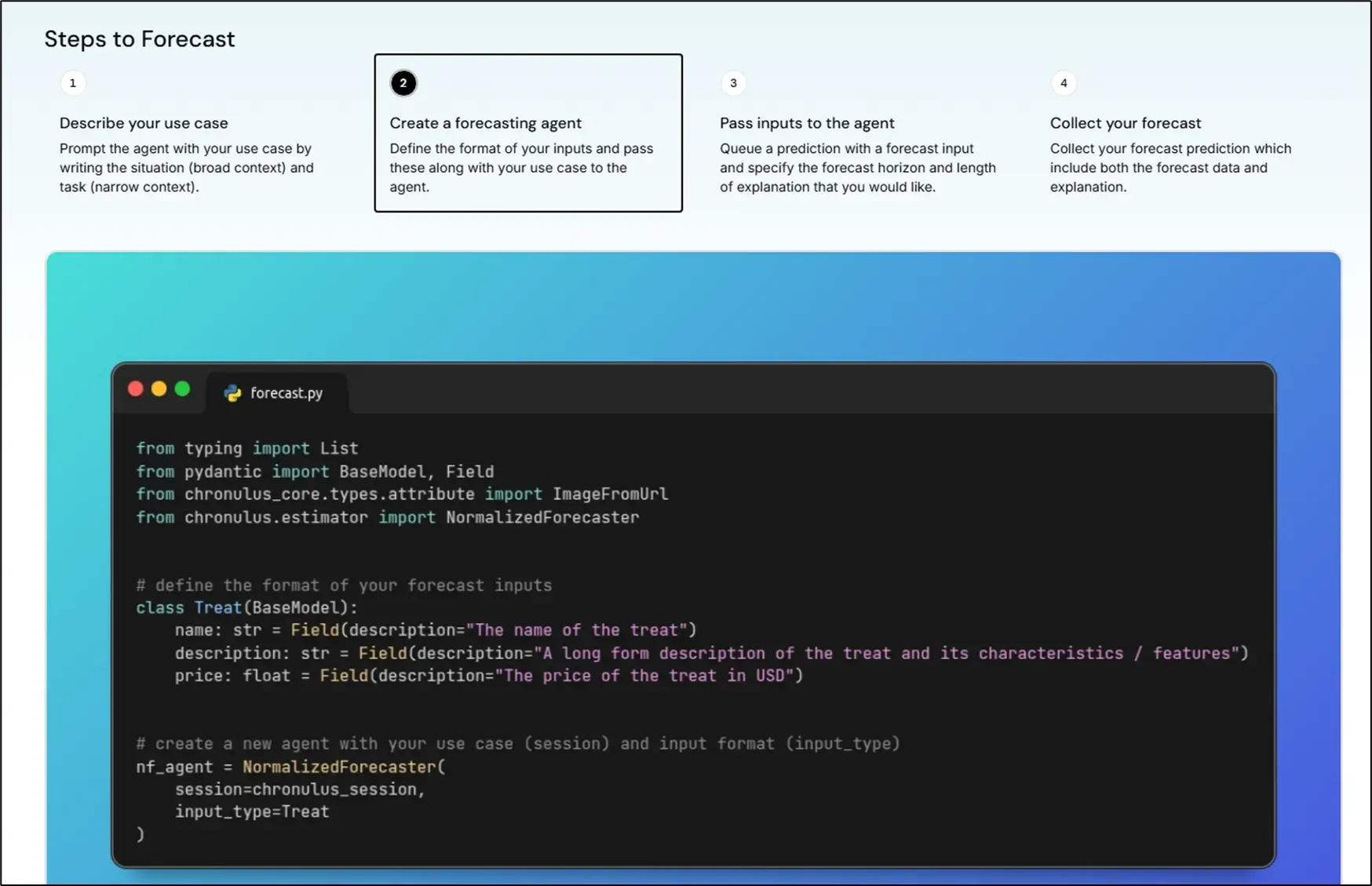

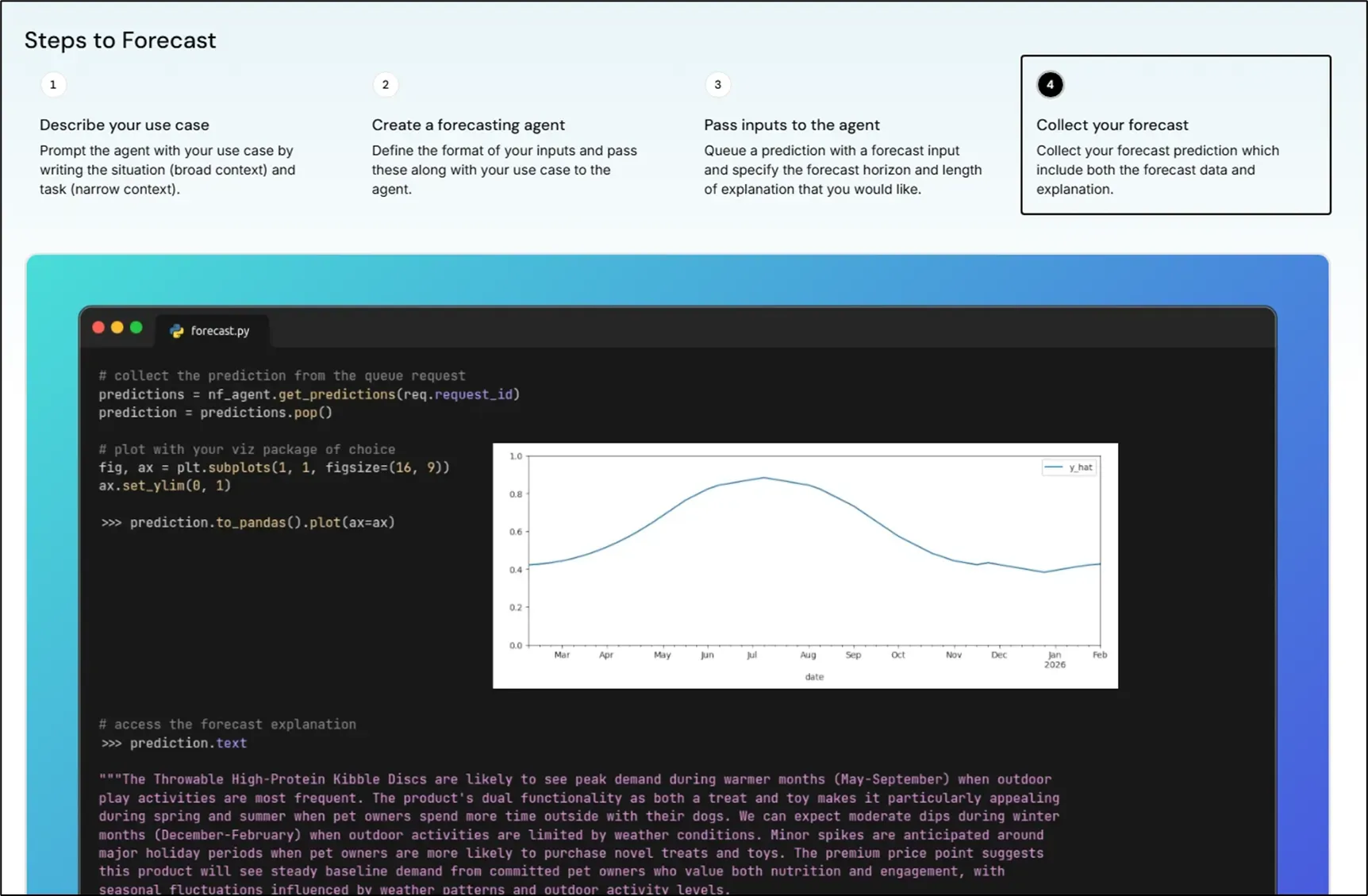

Despite the slower progress on S&OP agents, I've been pleasantly surprised by Chronulus AI's unique and innovative use of LLMs for direct forecasting—something I didn't predict.

While the full mechanics are proprietary, Chronulus appears to leverage LLMs' ability to generate coherent numerical patterns, as demonstrated in articles like "Can We Use DeepSeek-R1 for Time-Series Forecasting?"

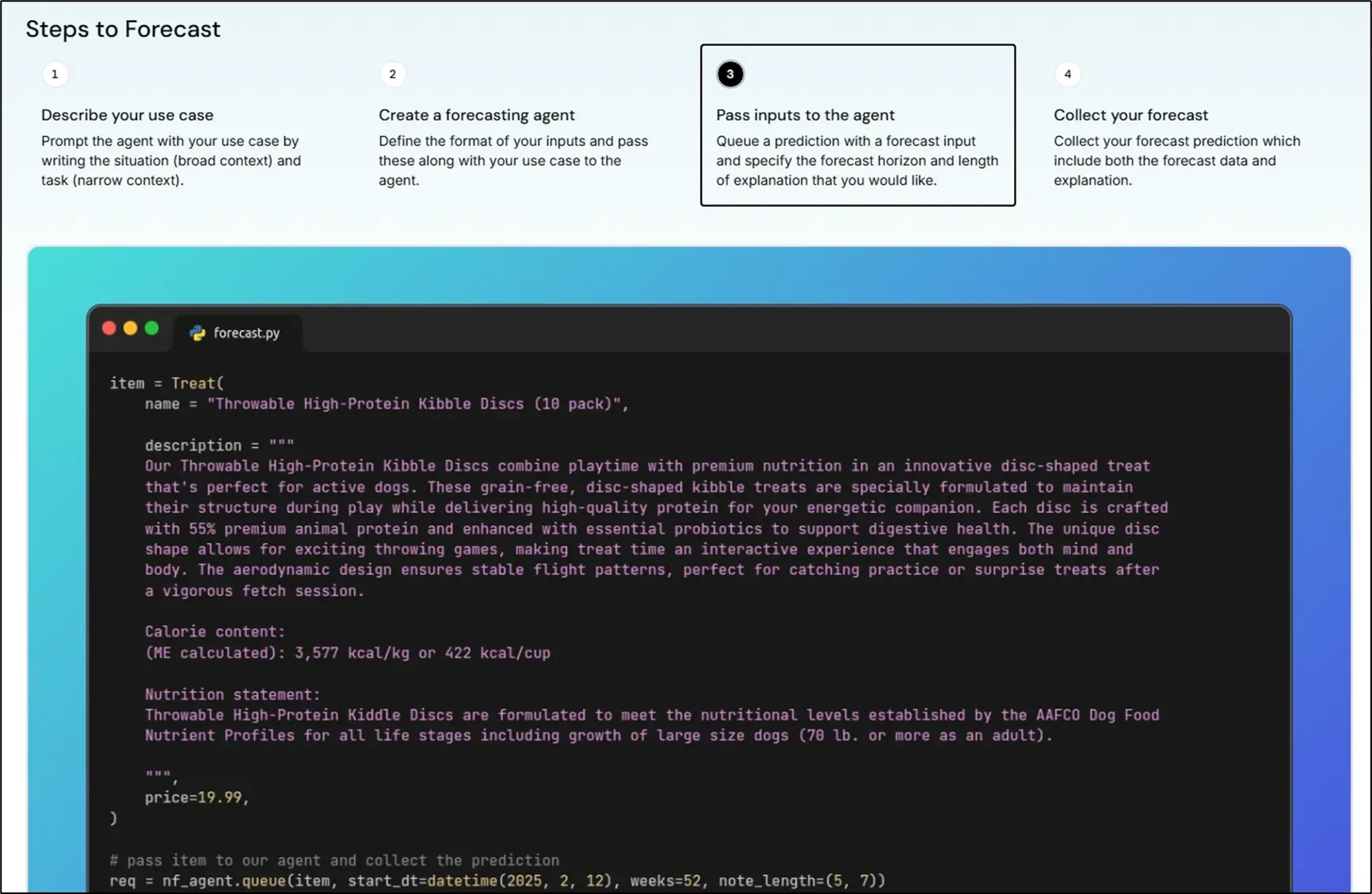

Cleverly, Chronulus employs a framework where an LLM generates a normalized forecast (between zero and one) using any input data (sales of other products, descriptions, pricing, weather, location, etc.). This creates a sales pattern that can then be scaled for different scenarios.

This approach is particularly interesting for new product forecasting, where the primary need is to generate a representative pattern considering the situation from all angles and then produce scenarios amid many unknowns.
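To make the "normalized pattern, then scale" idea concrete, here is a minimal sketch. It is not Chronulus's implementation, which is proprietary: the pattern values are invented stand-ins for what an LLM might return, the helper `scale_pattern` is hypothetical, and scaling by an assumed peak-week volume is just one plausible way to turn a zero-to-one pattern into unit forecasts.

```python
def scale_pattern(pattern, peak_units):
    """Scale a normalized pattern (values in [0, 1]) to absolute units.

    peak_units is the volume assumed for a pattern value of 1.0.
    """
    if any(p < 0 or p > 1 for p in pattern):
        raise ValueError("pattern values must lie between 0 and 1")
    return [p * peak_units for p in pattern]

# Invented normalized launch curve for five weeks (stand-in for LLM output).
pattern = [0.25, 0.5, 1.0, 0.75, 0.5]

# Assumed peak-week volumes for three planning scenarios.
scenarios = {"pessimistic": 400, "base": 1000, "optimistic": 2000}

forecasts = {
    name: scale_pattern(pattern, peak) for name, peak in scenarios.items()
}
for name, values in forecasts.items():
    print(name, values)
```

Separating shape from level this way means the pattern only needs to be generated once, while planners can revisit the scale assumption as early sales data arrives.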

Foundation Models:

Finally, I’ll briefly touch on foundation models. There's much written about these, and for deeper dives, I recommend checking out The Forecaster – Medium.

When I wrote my original article, the first time series foundation model, TimeGPT, had just been released. I included a small acknowledgment in the initial figure of that article. Since then, foundation models have firmly established their place in forecasting discussions.

I won’t wade into discussions on their effectiveness, but I will say that in the recent VN1 competition, the fifth-place finisher used a foundation model as part of an ensemble. Since the competition, multiple articles have also shown foundation models achieving top scores on the VN1 dataset (see the references).

What’s Next

I'll save my new long-range predictions for a future article and instead close with immediate next steps.

For companies aiming to stay at the cutting edge of this rapidly evolving tech landscape, regular pilots and small tests are crucial to explore new offerings firsthand and assess what works. We offer this as a service.

For those more interested in quickly implementing proven, cost-effective approaches, there's a unique opportunity today that I look forward to sharing in an upcoming article.

If either of these scenarios resonates with you, reach out at jesse@severalmillers.com or book a meeting on our calendar. I'd love to chat.

References:

https://www.chronulus.com/agents/forecasting-agents

https://www.youtube.com/watch?v=iOPVutyewW0

https://aihorizonforecast.substack.com/p/can-we-use-deepseek-r1-for-time-series

https://medium.com/the-forecaster

https://nixtla.r-universe.dev/articles/nixtlar/vn1-forecasting-competition.html